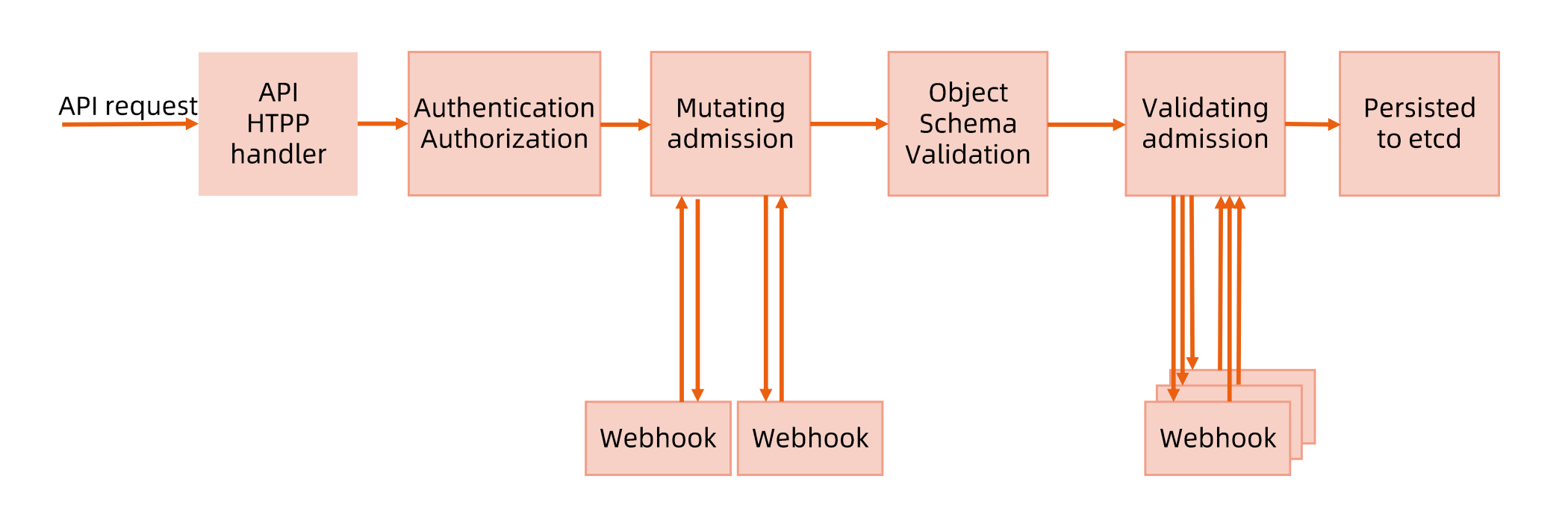

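API server access control overview #

- Authentication: verifies the identity of the user making the request

- Authorization: checks whether that user is permitted to perform the request

- Mutating admission: modifies the request object (for example, injecting default values)

- Schema validation: validates the final object after mutation

- Validating admission: applies custom validation rules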
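The order of these stages can be pictured as a chain of functions that each either pass the request on or reject it. The following is only a minimal sketch: the `Request` type, the `TOKENS` and `ALLOWED` tables, and the "kube-" naming rule are hypothetical stand-ins, not real apiserver code.

```python
from dataclasses import dataclass, field


class Rejected(Exception):
    """Raised by any stage that refuses the request."""


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    obj: dict = field(default_factory=dict)
    user: str = ""


# Hypothetical lookup tables standing in for real authenticators and authorizers.
TOKENS = {"alice-token": "alice"}
ALLOWED = {("alice", "create", "pods")}


def authenticate(req):
    user = TOKENS.get(req.token)
    if user is None:
        raise Rejected("401 Unauthorized")
    req.user = user


def authorize(req):
    if (req.user, req.verb, req.resource) not in ALLOWED:
        raise Rejected("403 Forbidden")


def mutate(req):
    # Mutating admission: inject a default value when the field is missing.
    req.obj.setdefault("restartPolicy", "Always")


def validate_schema(req):
    # Schema validation runs on the object as it looks after mutation.
    if not isinstance(req.obj.get("name"), str):
        raise Rejected("422 Invalid: name must be a string")


def validate_custom(req):
    # Validating admission: a custom (hypothetical) rule.
    if req.obj["name"].startswith("kube-"):
        raise Rejected("403 denied by validating admission")


PIPELINE = [authenticate, authorize, mutate, validate_schema, validate_custom]


def handle(req):
    """Run every stage in order; the first rejection aborts the request."""
    for stage in PIPELINE:
        stage(req)
    return req.obj
```

Calling `handle(Request("alice-token", "create", "pods", {"name": "web"}))` returns the object with the injected default, while an unknown token is rejected at the first stage.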

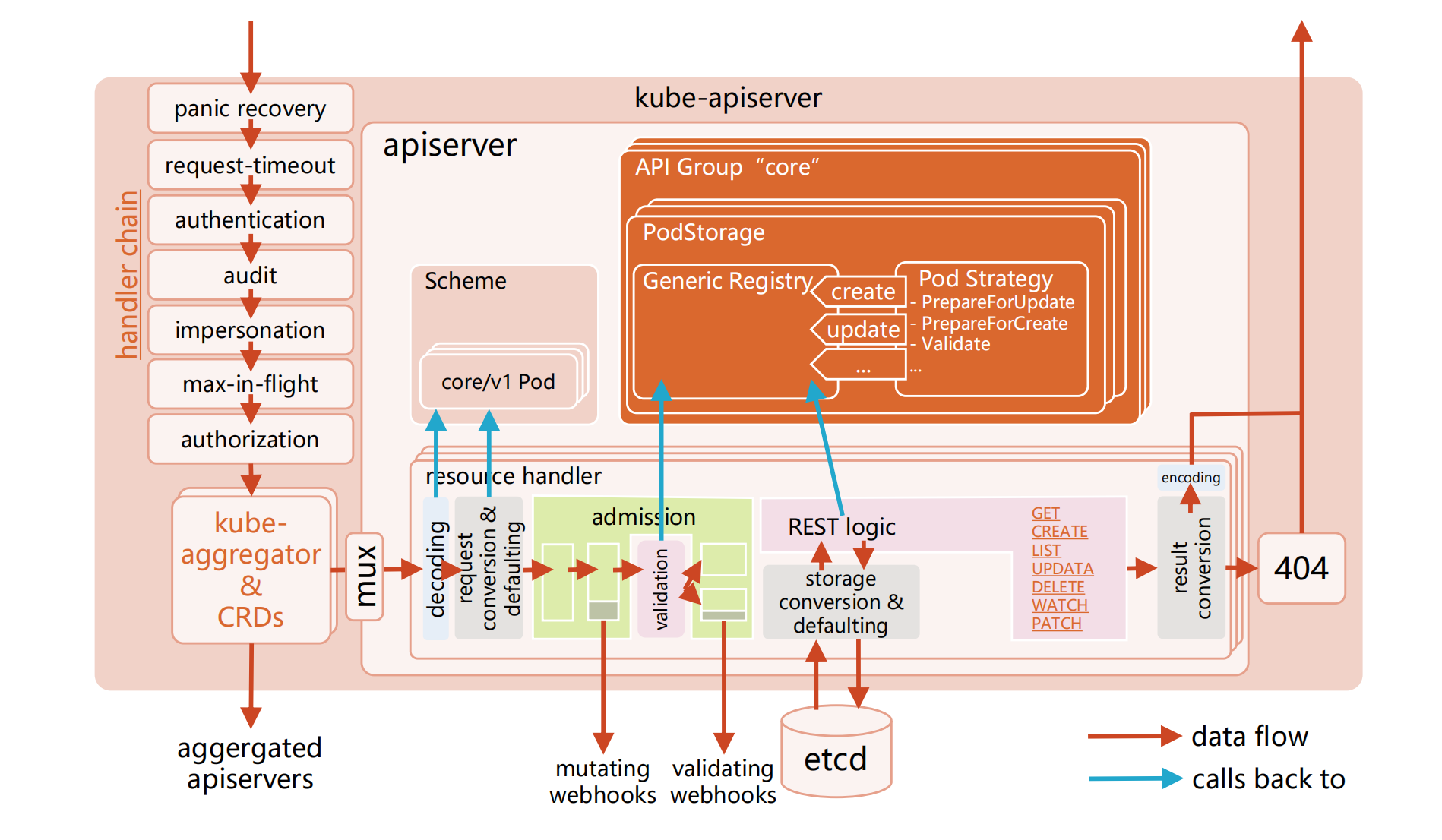

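- panic recovery: global recovery from panics

- request-timeout: sets a timeout so that a request cannot stay pending indefinitely

- audit: audit logging

- impersonation: records the real requesting user in the request headers. A typical use case is cluster federation: requests sent down by the federation layer usually use a root kubeconfig, so impersonation puts the real user's information into the request headers and lets the member cluster's apiserver perform the permission check

- max-in-flight: rate limiting

- kube-aggregator: determines whether the request targets a standard Kubernetes object; if not, the request can be forwarded to a specific apiserver (acting as a proxy)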

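Authentication mechanisms #

- X509 certificates

  - Configured when the API server starts: `--client-ca-file=SOMEFILE`. During certificate authentication, the CN field is used as the user name and the organization (O) fields are used as group names.

- Static token file

  - Configured when the API server starts: `--token-auth-file=SOMEFILE`

  - The file is in CSV format; each line contains at least three columns: token, username, user id

- Bootstrap tokens

  - To support smooth bootstrapping of new clusters, Kubernetes includes a dynamically managed bearer token type, the bootstrap token

  - Stored as Secrets in the kube-system namespace

  - The TokenCleaner controller in the controller manager deletes bootstrap tokens once they expire

  - When the cluster is deployed with kubeadm, they can be listed with `kubeadm token list`

- Static password file

  - Configured when the API server starts: `--basic-auth-file=SOMEFILE` (this flag has been removed in recent Kubernetes releases)

  - The file is in CSV format; each line contains at least three columns: password, username, user id

- ServiceAccount

  - ServiceAccounts are created automatically by Kubernetes and are mounted automatically into containers under /run/secrets/kubernetes.io/serviceaccount

- OpenID

  - An authentication mechanism built on OAuth 2.0

- Webhook token authentication

  - `--authentication-token-webhook-config-file`: points to a configuration file that describes how to reach the remote webhook service

  - `--authentication-token-webhook-cache-ttl`: how long authentication decisions are cached; the default is 2 minutes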
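To make the static token file format described above concrete, here is a small sketch of how such a file could be parsed and used to resolve a bearer token. The function names `load_token_file` and `authenticate` are illustrative assumptions; this is not how kube-apiserver is implemented internally.

```python
import csv
import io


def load_token_file(text):
    """Parse token,user,uid[,"group1,group2"] rows into a lookup table."""
    table = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 3:
            continue  # skip blank or malformed lines
        token, user, uid = (col.strip() for col in row[:3])
        groups = []
        if len(row) > 3 and row[3]:
            # The optional fourth column is a quoted, comma-separated group list.
            groups = [g.strip() for g in row[3].split(",")]
        table[token] = {"username": user, "uid": uid, "groups": groups}
    return table


def authenticate(authorization_header, table):
    """Return user info for an 'Authorization: Bearer <token>' value, or None."""
    scheme, _, token = authorization_header.partition(" ")
    if scheme != "Bearer":
        return None
    return table.get(token.strip())
```

A line such as `cncamp-token,cncamp,1000,"group1,group2,group3"` yields the user `cncamp` with uid `1000` and three groups. Authentication only establishes who the caller is; whether the request is allowed is decided later by authorization.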

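Static token authentication #

Generate the static token file:

cncamp-token,cncamp,1000,"group1,group2,group3"

Back up the original kube-apiserver.yaml:

cp /etc/kubernetes/manifests/kube-apiserver.yaml ~/kube-apiserver.yaml

Replace kube-apiserver.yaml with the following (it adds --token-auth-file and the auth-files volume):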

apiVersion: v1

kind: Pod

metadata:

annotations:

kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.56.2:6443

creationTimestamp: null

labels:

component: kube-apiserver

tier: control-plane

name: kube-apiserver

namespace: kube-system

spec:

containers:

- command:

- kube-apiserver

- --advertise-address=192.168.56.2

- --allow-privileged=true

- --authorization-mode=Node,RBAC

- --client-ca-file=/etc/kubernetes/pki/ca.crt

- --enable-admission-plugins=NodeRestriction

- --enable-bootstrap-token-auth=true

- --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

- --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

- --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

- --etcd-servers=https://127.0.0.1:2379

- --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt

- --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt

- --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key

- --requestheader-allowed-names=front-proxy-client

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --requestheader-extra-headers-prefix=X-Remote-Extra-

- --requestheader-group-headers=X-Remote-Group

- --requestheader-username-headers=X-Remote-User

- --secure-port=6443

- --service-account-issuer=https://kubernetes.default.svc.cluster.local

- --service-account-key-file=/etc/kubernetes/pki/sa.pub

- --service-account-signing-key-file=/etc/kubernetes/pki/sa.key

- --service-cluster-ip-range=10.96.0.0/12

- --tls-cert-file=/etc/kubernetes/pki/apiserver.crt

- --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

- --token-auth-file=/etc/kubernetes/auth/static-token

image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

host: 192.168.56.2

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-apiserver

readinessProbe:

failureThreshold: 3

httpGet:

host: 192.168.56.2

path: /readyz

port: 6443

scheme: HTTPS

periodSeconds: 1

timeoutSeconds: 15

resources:

requests:

cpu: 250m

startupProbe:

failureThreshold: 24

httpGet:

host: 192.168.56.2

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/ca-certificates

name: etc-ca-certificates

readOnly: true

- mountPath: /etc/pki

name: etc-pki

readOnly: true

- mountPath: /etc/kubernetes/pki

name: k8s-certs

readOnly: true

- mountPath: /usr/local/share/ca-certificates

name: usr-local-share-ca-certificates

readOnly: true

- mountPath: /usr/share/ca-certificates

name: usr-share-ca-certificates

readOnly: true

- mountPath: /etc/kubernetes/auth

name: auth-files

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

securityContext:

seccompProfile:

type: RuntimeDefault

volumes:

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/ca-certificates

type: DirectoryOrCreate

name: etc-ca-certificates

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: etc-pki

- hostPath:

path: /etc/kubernetes/pki

type: DirectoryOrCreate

name: k8s-certs

- hostPath:

path: /usr/local/share/ca-certificates

type: DirectoryOrCreate

name: usr-local-share-ca-certificates

- hostPath:

path: /usr/share/ca-certificates

type: DirectoryOrCreate

name: usr-share-ca-certificates

- hostPath:

path: /etc/kubernetes/auth

type: DirectoryOrCreate

name: auth-files

Access the API server with the token:

root@master01:~/101/module6/basic-auth# curl https://192.168.56.2:6443/api/v1/namespaces/default -H "Authorization: Bearer cncamp-token" -k

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "namespaces \"default\" is forbidden: User \"cncamp\" cannot get resource \"namespaces\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"name": "default",

"kind": "namespaces"

},

"code": 403

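}

The token is authenticated as user cncamp, but the request is rejected with 403 because that user has not been granted any permissions yet.

X509 authentication #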

Generate a private key:

openssl genrsa -out myuser.key 2048

Generate a CSR:

openssl req -new -key myuser.key -out myuser.csr

Base64-encode the CSR:

root@master01:~/101/module6/basic-auth# cat myuser.csr | base64 | tr -d "\n"

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ3lUQ0NBYkVDQVFBd2dZTXhDekFKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJREFoVGFHRnVaMmhoYVRFUgpNQThHQTFVRUJ3d0lVMmhoYm1kb1lXa3hEekFOQmdOVkJBb01CbTE1ZFhObGNqRVBNQTBHQTFVRUN3d0diWGwxCmMyVnlNUTh3RFFZRFZRUUREQVp0ZVhWelpYSXhHekFaQmdrcWhraUc5dzBCQ1FFV0RHMTVkWE5sY2tCbkxtTnYKYlRDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTU9nTjFJd0VMejUxUzRMWi8vQwordzFVeXJHNHU2eURBR0gyWmZBV1JSVXFqQU8zTnJMN0hXdDJFbHJFeEdjcFpqS0dBazRPK0wzQXB1d2R6dFRtCkZ6V2RXUXVWZXA5S21VbWUzTjVpc3dFU0R3SVRXUXNxTys2OUlWa0hyN1hFMEJZeHRNMXhIYVRKZVVJZDFTazgKZUZJYitCTDVOOG8zYmJKMXJJL2hFQnppS2tXZE8xaVFtZTI3T2RPZTA5TGdRRXBvS2tzeldOSzVvcWQrOElGWQpaUWdxalhvRDBsVDVWeHBVS2l0YVlPclBRUXoxNXVGMStXV1dCNThiQ3FsdFF4WUVUSG0rZjVCWTcvWFJLS0tMCmV3blBMUE5BSnpXcWhRZ0p1cHZyMjFOYUxlSEhLaXQwS0dHODhtR0N0bnRZY2QrNlp2YzdTblBxSy9YRStGRk0KeWNjQ0F3RUFBYUFBTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFCWkdxYTRnWkU1Qlh6K2N0c0Y5RHc1WGJYYgpDOW9sTit6SXFrOVFXSXhNQldvcnlsRk96Rllhb2lMb1luWi9waU1tZWtBdTkyQTh1Z1N0b1hGRWRmNFQwNDdLClFsWlcvK0NvL0xrTHR6dlZ4UCtxaE94WFVFNlVIZlZJdXhoTlpKU0NSbkhDdFowOExlK1U1OElHTEo1Zm13YXkKSk53KzJkelljak8zSmM2TFoxKzI5b3l2WVB0VFEzUEFsaXJLMzBPcnE3ZzFKd3JOSVdOeGR6NmwvOEtsVk1HWgpXbDVsSDdubEYxdDg5cTBGNUc3c0FrSmE4OVpwZ1M4TnNFcGlOc0RhNUxpM1JxR3FGbXZ1S3dYQlAveG1pcVdyCmdUWUJuSGRyQ2VGVjFHNnlhTFpsSE9xS0xOa002SUd2MUJ0b3A3M1pDOFd3Q3dMSU9oRTNiN1JKZDJvQgotLS0tLUVORCBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K

Create the CertificateSigningRequest object:

root@master01:~/101/module6/basic-auth# cat <<EOF | kubectl apply -f -

> apiVersion: certificates.k8s.io/v1

> kind: CertificateSigningRequest

> metadata:

> name: myuser

> spec:

> request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ3lUQ0NBYkVDQVFBd2dZTXhDekFKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJREFoVGFHRnVaMmhoYVRFUgpNQThHQTFVRUJ3d0lVMmhoYm1kb1lXa3hEekFOQmdOVkJBb01CbTE1ZFhObGNqRVBNQTBHQTFVRUN3d0diWGwxCmMyVnlNUTh3RFFZRFZRUUREQVp0ZVhWelpYSXhHekFaQmdrcWhraUc5dzBCQ1FFV0RHMTVkWE5sY2tCbkxtTnYKYlRDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTU9nTjFJd0VMejUxUzRMWi8vQwordzFVeXJHNHU2eURBR0gyWmZBV1JSVXFqQU8zTnJMN0hXdDJFbHJFeEdjcFpqS0dBazRPK0wzQXB1d2R6dFRtCkZ6V2RXUXVWZXA5S21VbWUzTjVpc3dFU0R3SVRXUXNxTys2OUlWa0hyN1hFMEJZeHRNMXhIYVRKZVVJZDFTazgKZUZJYitCTDVOOG8zYmJKMXJJL2hFQnppS2tXZE8xaVFtZTI3T2RPZTA5TGdRRXBvS2tzeldOSzVvcWQrOElGWQpaUWdxalhvRDBsVDVWeHBVS2l0YVlPclBRUXoxNXVGMStXV1dCNThiQ3FsdFF4WUVUSG0rZjVCWTcvWFJLS0tMCmV3blBMUE5BSnpXcWhRZ0p1cHZyMjFOYUxlSEhLaXQwS0dHODhtR0N0bnRZY2QrNlp2YzdTblBxSy9YRStGRk0KeWNjQ0F3RUFBYUFBTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFCWkdxYTRnWkU1Qlh6K2N0c0Y5RHc1WGJYYgpDOW9sTit6SXFrOVFXSXhNQldvcnlsRk96Rllhb2lMb1luWi9waU1tZWtBdTkyQTh1Z1N0b1hGRWRmNFQwNDdLClFsWlcvK0NvL0xrTHR6dlZ4UCtxaE94WFVFNlVIZlZJdXhoTlpKU0NSbkhDdFowOExlK1U1OElHTEo1Zm13YXkKSk53KzJkelljak8zSmM2TFoxKzI5b3l2WVB0VFEzUEFsaXJLMzBPcnE3ZzFKd3JOSVdOeGR6NmwvOEtsVk1HWgpXbDVsSDdubEYxdDg5cTBGNUc3c0FrSmE4OVpwZ1M4TnNFcGlOc0RhNUxpM1JxR3FGbXZ1S3dYQlAveG1pcVdyCmdUWUJuSGRyQ2VGVjFHNnlhTFpsSE9xS0xOa002SUd2MUJ0b3A3M1pDOFd3Q3dMSU9oRTNiN1JKZDJvQgotLS0tLUVORCBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K

> signerName: kubernetes.io/kube-apiserver-client

> expirationSeconds: 86400 # one day

> usages:

> - client auth

> EOF

certificatesigningrequest.certificates.k8s.io/myuser created

Check the CSR status. It is Pending, which means the certificate has not been issued yet:

root@master01:~/101/module6/basic-auth# k get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

myuser 3s kubernetes.io/kube-apiserver-client kubernetes-admin 24h Pending

Approve the request to issue the certificate:

kubectl certificate approve myuser

Retrieve the certificate:

kubectl get csr myuser -o jsonpath='{.status.certificate}'| base64 -d > myuser.crt

Update the kubeconfig to add the myuser user:

kubectl config set-credentials myuser --client-key=myuser.key --client-certificate=myuser.crt --embed-certs=true

View the kubeconfig:

root@master01:~/101/module6/basic-auth# cat ~/.kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EWXhPVEExTXpjME5Wb1hEVE15TURZeE5qQTFNemMwTlZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTmRlCmdPc2FmanBmanFIbXR5VEFMenhQdU50VWRoMUNFc2dmcmRpM1QveGRpcjlUV3Vxbi9HZnAvdlJZZVpmQUJyT3kKcU5yMjF6dzJQZTBqVXFyc2pCVmMvSDdwL3d6d3psc0hqWVcxY0NIbDdwcFd3QUp5VWo0VWNvMFY3amxQTnNXTApkNEgxWjNHL3RkbE9ZMS9FQ2ZlVUxWWW4vb0g0eUNRazNzaTArckh6ejNOdDAvdWpKYStpWThpUmR5d05pUngvCk51RG96S2pvOHFSNWVtUjc1YUR5TnRsQ3d5M1ZvL0t2NFlWUUZSdGc4TENQT2VhZUlYN0ZyUmI2VUtWc29sL2EKNDN4bDFwSDhWbGJOMkNzcGIxMVFETmZIS1MyNXpvWmp1b29tTDd1MDg5a2ZONjN0dmdxMlVvam1hNGJYT0oxegowdVdkVUcvOUVVbmxNUjBVd3hzQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZQN0JmZ3libXJBbFhxLzl2bnJQSnB1YlVFTWdNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBTlZTeUpsUUUyZ3QveCtneklxeApVVitpVjBNTjVDZlBJQWwrVUxWMG5lWHMrMkJDVmNxVDVDK1UxY21EcVpRc1NpaXBwbUVPNDgwUUY5ZTk1YTVECkhPNG5KeStCT0VQWGl5TDhTMzkwTzZ6Z2JRVEpsN3ViaVVoN1QyeGtoL21SZ0dlSWwvbmxvaE1HTjNTQlJQMlIKeWVHOGtBby9CL2lON2dpM3RSQWk2dWt2aThBQzRJM01LR05GZVV5MlpTM1FNNWxIbFNqc3dMNzdvc20zVlB1RQpVWVVSV2FyWmpCc25mYU12YVh1SXFVSENaRzVsUTdNWGpmNkxBMXVUZytsNHFaN0hxanliR3R4RWVqRzFCaENXCnorUUJnOVJKUW16bTdwWS9uN0t5ZjJqcVB2bEVJNm9naVJjeTJoQVhnSnZWUm50NXpJK3pDYmhSeU9EenBFWXYKeEp3PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.56.2:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJVnowcmUyaUp2Ym93RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBMk1Ua3dOVE0zTkRWYUZ3MHlNekEyTVRrd05UTTNORGRhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXpITDFQZUVaaWdaWjA3OC8KOVRXYVMyTjFTWFZEOFpSR3liTnk3UGVDUmMxdWdGclNZS2c3RmczeG40UnluSHd0b0JyQjZmdVRNMFNjMGZUbwpqbUErblhaOEhNVHdhK1JjMVJ5UENTcTlNR3hKbGhWbnVxMlNwN0pMbUpnNVN2NjM3Wm95OWtLS2R3OEIxWkwxClJVWTF0aTdRREJVK0hndHZqUGt2NEtRNVZvYklxWWE3SmkwODJydjhTNmduTEFPV1ZtNkd0b0liNW1WcUExQjAKNXcxZEJHTklqTzQ1WE15WUtVWW9Eb0ErTGovS3p3b3MwT0JjYmtYbjY5RjM2dXZ1aEZaRUg3K1RKRFYxZXM2dwpqbU40MDNqVlpLZ0hPTU4rWlFpWU9DZmRPMEJ5dktMSlpMeVNBKzQwWC9vb0Vqb0s3b1ZqbndFeVlRSU5VajJDCjRpb2JOd0lEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JUK3dYNE1tNXF3SlY2di9iNTZ6eWFibTFCRApJREFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBeEYyc3BZaXRVZFkwalg3OVdVMC9xYksyQVc1bk02Z2ZQUFV3ClE0dTVKS2x2TXlVNExZRFNHWmVLS3Y3eTFOa0FTTkhQem82Wkw5U1dpL3lhaGNMR20wbXFiblFFVW4rL3hvbzUKMm4rUVVpS1UzL2RiVjdpNXlBUXJoOElDUnlRa0R0ZlRjdUZ5VUJ1MkRUNHg3bXJoZTFtekgwZzRLQjhIRm44SQorcHovcWY4bk9QV0FET2V1elQ2dUloZkh4cFlrRGw4Qm82L1hSYVp4RFdzQVZLM081ZUxIVHZacVF5dEFLbDdBCk5VYmZ3V3JnWVpYVmhKQjZaYlJidFhSbjdXTE5VSUNTYi9rZ3JLZjc0aVhwYlJCREtpUTc0SFpNeFMrL1Fkb0sKMXo2YTRiOFpnbWg1ckpPVms1RWtMUEFPSXNnZ00rVW5SK2FqZGgxN1VGakhDMmlJT1E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBekhMMVBlRVppZ1paMDc4LzlUV2FTMk4xU1hWRDhaUkd5Yk55N1BlQ1JjMXVnRnJTCllLZzdGZzN4bjRSeW5Id3RvQnJCNmZ1VE0wU2MwZlRvam1BK25YWjhITVR3YStSYzFSeVBDU3E5TUd4SmxoVm4KdXEyU3A3SkxtSmc1U3Y2Mzdab3k5a0tLZHc4QjFaTDFSVVkxdGk3UURCVStIZ3R2alBrdjRLUTVWb2JJcVlhNwpKaTA4MnJ2OFM2Z25MQU9XVm02R3RvSWI1bVZxQTFCMDV3MWRCR05Jak80NVhNeVlLVVlvRG9BK0xqL0t6d29zCjBPQmNia1huNjlGMzZ1dnVoRlpFSDcrVEpEVjFlczZ3am1ONDAzalZaS2dIT01OK1pRaVlPQ2ZkTzBCeXZLTEoKWkx5U0ErNDBYL29vRWpvSzdvVmpud0V5WVFJTlVqMkM0aW9iTndJREFRQUJBb0lCQVFDM1lySko0L3lGaXZiTgo4WEdNSUoyYTI4YWJzbnpVVjcwN05TUjBHL3NWWTVTbnUwK1RkYk15TUNXNGdSUlEreTN0dTdLT2o2TlV6RW1pCkpuem5JTHRwZ0pzSkx6bThmV1VybjJkSndMVmNsdlZXa3pLdEJ2NVNQNktCYUtHVGZIRTh4aURLTlp0LytjMGEKWnF4c2kvS256TXUrMnRzU0ZnM2tOS3hXWXRndm5EQnZObDNnUHE5ZEhNUVlNMUh4eXYrOWpjQWRibGluMWZvSwpNOUhiWU95eWdnNTZJNkJHK3J4N2wxSjdSenVUMU4rYjEvVkdtbUsydnIrYytpWnRxeGFSdnM3Z1dWb0pFbDVSCnZ2VHNKWWRwSEVvVVBHQWYyTmsrRXdwMWs1aFc1YjlaT1UySytHMVNmTjZsd1ZWb2VLWXEwOCsvUnc5d0lTUUQKLzNGSnhHQkJBb0dCQU5CVXdkVkY5MTFIbTkza01kS05ISVJQQVBtNzZQajVDdGdoV2ZTQldrL0ZGdmQ4TWlVdwoyQnUyR1ZHRXlBMVptcU1zRGRSbjV5OHQzZjE4dHI4TmRvRmJEYUcyVm1TcVN0KzhLVW5HNjJXMUdvVTZ2dDFTCitiNFIxZWNoemNKK2hKR3QzdnhSYWhHTG5SWitwSlYzRHdYY2R2ZHBCY3NoR3dtMy9DNmJqeUtYQW9HQkFQczYKem00cXZ1TXRVMndoTkI1bDRFWkh6YmRMVVZadFJscnNkRkFmYzhhQlVabGlzNFlvUm0zcnNsQmUvYTFwK3RKUwpiVnlwakc5SnVrY0xwUnJCRmFkSlJMclI3U1AvSWtYcE95TEUzT1ZURnUxMWJTTEtIaExxUkVDbXFzemJkaHZsCmJodDRhRURIMkNiQnpRVnViaTZSK0xUM2pUMW5MQVFkcHZQcVFnQmhBb0dBQmttb21BRkdtQUFqU2kwcSs4bmEKaEh2RjhjT2tJbStSempnamVPZTJqQlhNdmFkMzgvdG5hbDZ5b08wN0JId2gySzdwcy9GMjNzdXBtWTc4RFFRaApBWUo0Qk50MS9BL1B0clQ5SWdibzcrYnBaLy8vNXJvc1kzb3lWSW1HcGtvZlFpNVhQcEpPZXowVmZxcFAyVnNBCmp4SzZYSGxFL1g2QVRHakxLYlQwT3YwQ2dZQkpMQWVUN3I5S1M1bFFsUnNvLzJNakZTYkZqQnBVb2Q4ci9GS2sKUTRUay9DVllGM2RTUzhpM216NkVTaVo0cTdWeUxLL05uVlJaMVk5N3dkaUV3bGdjTVNyamZ1RWk2dHlBb0QycApFczJEdlgrZ0NlT1BqbTdUODRlTmpQMlNUUmxKWnJsN0pzYTJsMzVOUzRUN1gvNlhjY3lPYU11cVpySmJRSWV2CjR6cjJBUUtCZ0FOZ0R5N0VPMS9lRG56aWF
CN1ZNQ2lXZHJ0VjkvSnVFMk1wdUZJS1hQbkgvMGMzeEpIUHp2VUMKdThjOHlOTSsvcGRvT3g1QjBtK0tXRWI4ZE9NWWxOcGFDTnZGSit3MGNXV3dkODR3YkVSM0w2TnIyWkwwZ1EvKwovYXlYZnVsUkYrNUQrYTkzb3ZiVmVRUWl3TTFzTTBkNmlmZG02NzUrZW9zc1BvcVI3bDZhCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

**- name: myuser**

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURURENDQWpTZ0F3SUJBZ0lSQU8xWU01VXlNS2V3d2xNU3EraVBvV013RFFZSktvWklodmNOQVFFTEJRQXcKRlRFVE1CRUdBMVVFQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBM01ESXdPREV3TkRSYUZ3MHlNakEzTURNdwpPREV3TkRSYU1HWXhDekFKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoVGFHRnVaMmhoYVRFUk1BOEdBMVVFCkJ4TUlVMmhoYm1kb1lXa3hEekFOQmdOVkJBb1RCbTE1ZFhObGNqRVBNQTBHQTFVRUN4TUdiWGwxYzJWeU1ROHcKRFFZRFZRUURFd1p0ZVhWelpYSXdnZ0VpTUEwR0NTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFERApvRGRTTUJDOCtkVXVDMmYvd3ZzTlZNcXh1THVzZ3dCaDltWHdGa1VWS293RHR6YXkreDFyZGhKYXhNUm5LV1l5CmhnSk9Edmk5d0tic0hjN1U1aGMxblZrTGxYcWZTcGxKbnR6ZVlyTUJFZzhDRTFrTEtqdnV2U0ZaQjYrMXhOQVcKTWJUTmNSMmt5WGxDSGRVcFBIaFNHL2dTK1RmS04yMnlkYXlQNFJBYzRpcEZuVHRZa0pudHV6blRudFBTNEVCSwphQ3BMTTFqU3VhS25mdkNCV0dVSUtvMTZBOUpVK1ZjYVZDb3JXbURxejBFTTllYmhkZmxsbGdlZkd3cXBiVU1XCkJFeDV2bitRV08vMTBTaWlpM3NKenl6elFDYzFxb1VJQ2JxYjY5dFRXaTNoeHlvcmRDaGh2UEpoZ3JaN1dISGYKdW1iM08wcHo2aXYxeFBoUlRNbkhBZ01CQUFHalJqQkVNQk1HQTFVZEpRUU1NQW9HQ0NzR0FRVUZCd01DTUF3RwpBMVVkRXdFQi93UUNNQUF3SHdZRFZSMGpCQmd3Rm9BVS9zRitESnVhc0NWZXIvMitlczhtbTV0UVF5QXdEUVlKCktvWklodmNOQVFFTEJRQURnZ0VCQUpha1AxUm9oK09BQ1Y1TEIxRjhJcUZVVFVRc3MyNnlucVlnMnAxQzNnek0KRStpNWxuZnhGSkVORGJyeWR2Q0dHak5TZWYwWGlESVZ5ZXIxRE95MlpWQ3Btd2xPUWVnYlI2NGs5WGVReUhPOApkMWZxUHVzZFU1dTNsWUNUdm8weDlRU0Z1a3loVG1yYVlhYWh6Q2dQcE0va2ExNkhHQVhpRzVUQXllVTl6TVpOCnhySmpSbGNOamZYODNrU1lUQVJydTJiRzVEdkVWRDlqK1phWU84a0VOZm1yd2ZhT3JiakVKQU15YUNYTzFidjUKVGJUTmNzcnlRZURuaGREYUxXbC9oOFJadDRiMTZRY0tmSEozKzNYbkVQeFBkSHZOSjdkcm5hYU1BN2VFOHBZbQppdnV1M3pKTzY1VXB6K3MrWVFKQy9LVzJKUEZzZEQ2ZWRiRzN2ZE1qeG9NPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBdzZBM1VqQVF2UG5WTGd0bi84TDdEVlRLc2JpN3JJTUFZZlpsOEJaRkZTcU1BN2MyCnN2c2RhM1lTV3NURVp5bG1Nb1lDVGc3NHZjQ203QjNPMU9ZWE5aMVpDNVY2bjBxWlNaN2MzbUt6QVJJUEFoTloKQ3lvNzdyMGhXUWV2dGNUUUZqRzB6WEVkcE1sNVFoM1ZLVHg0VWh2NEV2azN5amR0c25Xc2orRVFIT0lxUlowNwpXSkNaN2JzNTA1N1QwdUJBU21ncVN6Tlkwcm1pcDM3d2dWaGxDQ3FOZWdQU1ZQbFhHbFFxSzFwZzZzOUJEUFhtCjRYWDVaWllIbnhzS3FXMURGZ1JNZWI1L2tGanY5ZEVvb290N0NjOHM4MEFuTmFxRkNBbTZtK3ZiVTFvdDRjY3EKSzNRb1lienlZWUsyZTFoeDM3cG05enRLYytvcjljVDRVVXpKeHdJREFRQUJBb0lCQUdLZmswUk1GeVF1ajlyMgp4U2VjRlJWVGVoeS9GVjZUYk0zMmVzM2ZiRlNQYnFjdzV0SzA1dEFXWm9wOFNNZjVoeHhSa3pmbk5GLzFrREhaCmxUeWdBM04wTUVBMnkrc2lvTVVNNGl6N2RXTkV4MncwZE4rOEd4cnhIcTdUd1RIU1YxWFpHVjI1ZVVocWlrZGEKNHV3M0lESEZCL3dJeUtlWjZpUGVUM0Q1OWpXWXJnNGpLaUhmUjNOU1RWQTRRc2NLdm04eXNrZk10UEZ6bERGagpnZW9jcGJRLzY0VmtDWGZoZnZOVy8wM3FLVHFTb3dSNTc4NFpKQTZocXo0R2NDTStFTm9yRDZyaVhtSDlpNUhlCkFUZGJVeUg0d1A3WVZxL1Rodnd6OEc3UlRDdFFlTVBjRW16MVlqSlBLNEZMdTUrc0NxUGl4cUlQSmg2TFVpVHUKZXJIZmM0RUNnWUVBNTlkSU9vOXF6UWRyZVNwSHZ5ejdKbXZTb2FnVW5XVFFoZ3FOL0ZTRVB0VHp2WGJmcFEvRQpGbVBxK1BnZjBkQmlaajVaaWZwbDhsL0VJbDZPTkJtNzMwaWxLdlpwdnRJRS9uaktaTmVEVkh3cWxxQVJKSzJCClNZR1VUN0xGclJIV0doR2VaTmsxYWkzOHBqSFNIb2tOVEM2bG9JUTJGMEUrYytka2RTbzNPQk1DZ1lFQTJBTFcKL0FrcTcxZnExZGRLU2RaRFJzczhZeDNiNytJaEdsZlVhamJCN1VwYVpOdzN4ZWtEUFZQdWtIN20wc0V5dXlPdApyNGRwWk03NitqU2l5NU9zaGZSVWhHVlFkMUdqRGtFVEE1aTU5N2FWejhuZ0h4c2NpT0tid0M1dmZJSUJ3SmhWCnVUbmk0eFg4ei9hN0o2b1ZRNUc4ak9PcVJCTnJQNjJzd2VFV0JmMENnWUVBNHdYVnJicG9tOUxMTS8vcDk5T2wKTDQ5ZjEzck9qUDErai9OZjdCb2EwYWdYOFl5cEhXb2Qyc3NHK2J1RzlSNzRiQ1JiNjVmdUluUVNqSkZJOE0zTwpRYXhTU0lxNUsrbGVpSTFocTNPNkg0M2k2bEpkMXl1cnNYNFk3QjRrSWdDWVJqakFnUUtOb3FiYmd5YkFHYlNjCjk5K3B3bEFVNDVxNC9DZzNIK0F4NkU4Q2dZRUF3TytJWmdVcVBDMGlxMjFvQlJ0RGEvQUxOOXhyblk4MFVmc3cKMXMyaDJQZ1lWUTM5SXVCRTdIb1RFeXpGcm1peGowVUcxVWoxY2YwTlhuMjFDbkFVSUhGaksvM3lLU0RacXRtawp5Z21YMFJGY0xnUDZFczU4WmlkQlJoNHpwZG5aQnRVSmZDK2YzNFM4RW1RbU5mOU1qdFdVdTZKOWFlQ09Zb29WClMvWm5YV0VDZ1lFQXE5WWE4S1l3WlFONzJ
FRE9VV1pmSFhEdUtTZjRoTUZ1dEtCbEExMGs3N0FDUWFYdFcyZHQKM3JZUldGdHhRdnlMZDgvRmJxaGRSVm1YMmIyM2FWUlBMRmZIMDBGTURFVTJzdDB5ZEc4b1BDdnNyV3VNellpUgoyU1R4V0ZCQldtY0t5MDY1MlppRk5SdkxKZ0JBcVlvMUxQUFAzVUZBdmsxVFdldlZhZUd5dmpBPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

Use myuser to access the cluster:

root@master01:~/101/module6/basic-auth# k get pod --user myuser

Error from server (Forbidden): pods is forbidden: User "myuser" cannot list resource "pods" in API group "" in the namespace "default"

Authorization binding #

RBAC #

root@master01:~/101/module6/rbac# cat cluster-admin-to-myuser.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: default-admin

namespace: default

subjects:

- kind: User

name: myuser # the user being granted access

apiGroup: rbac.authorization.k8s.io

# - kind: ServiceAccount

# name: default

# namespace: kube-system

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

Inspect the cluster-admin ClusterRole. It grants every verb on every resource:

root@master01:~/101/module6/rbac# k get clusterrole cluster-admin -oyaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

creationTimestamp: "2022-06-19T05:37:59Z"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: cluster-admin

resourceVersion: "76"

uid: dc47523c-c98a-42c8-ba14-0ea2a073f567

rules:

- apiGroups:

- '*'

resources:

- '*'

verbs:

- '*'

- nonResourceURLs:

- '*'

verbs:

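- '*'

Create the RoleBinding:

k apply -f cluster-admin-to-myuser.yaml

The user now holds the cluster-admin permissions. Because the binding is a RoleBinding, they apply only within the default namespace: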

root@master01:~/101/module6/rbac# k get pod --user myuser

NAME READY STATUS RESTARTS AGE

envoy-f4dcfcf47-4mc6l 1/1 Running 0 2d17h

Integrating a webhook-based authentication service #

Create the webhook config file:

{

"kind": "Config",

"apiVersion": "v1",

"preferences": {

},

"clusters": [

{

"name": "github-authn",

"cluster": {

"server": "http://localhost:3000/authenticate"

}

}

],

"users": [

{

"name": "authn-apiserver",

"user": {

"token": "secret"

}

}

],

"contexts": [

{

"name": "webhook",

"context": {

"cluster": "github-authn",

"user": "authn-apiserver"

}

}

],

"current-context": "webhook"

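}

Edit the kubeconfig (vim ~/.kube/config) and append a new user at the end:

- name: newUser

user:

token: userToken

The new user can then be exercised with:

kubectl get po --user newUser

Modify the apiserver configuration to enable the authentication webhook: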

apiVersion: v1

kind: Pod

metadata:

annotations:

kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.34.2:6443

creationTimestamp: null

labels:

component: kube-apiserver

tier: control-plane

name: kube-apiserver

namespace: kube-system

spec:

containers:

- command:

- kube-apiserver

- --advertise-address=192.168.34.2

- --allow-privileged=true

- --authorization-mode=Node,RBAC

- --client-ca-file=/etc/kubernetes/pki/ca.crt

- --enable-admission-plugins=NodeRestriction

- --enable-bootstrap-token-auth=true

- --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

- --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

- --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

- --etcd-servers=https://127.0.0.1:2379

- --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt

- --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt

- --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key

- --requestheader-allowed-names=front-proxy-client

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --requestheader-extra-headers-prefix=X-Remote-Extra-

- --requestheader-group-headers=X-Remote-Group

- --requestheader-username-headers=X-Remote-User

- --secure-port=6443

- --service-account-issuer=https://kubernetes.default.svc.cluster.local

- --service-account-key-file=/etc/kubernetes/pki/sa.pub

- --service-account-signing-key-file=/etc/kubernetes/pki/sa.key

- --service-cluster-ip-range=10.96.0.0/12

- --tls-cert-file=/etc/kubernetes/pki/apiserver.crt

- --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

- --authentication-token-webhook-config-file=/etc/config/webhook-config.json

image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

host: 192.168.34.2

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-apiserver

readinessProbe:

failureThreshold: 3

httpGet:

host: 192.168.34.2

path: /readyz

port: 6443

scheme: HTTPS

periodSeconds: 1

timeoutSeconds: 15

resources:

requests:

cpu: 250m

startupProbe:

failureThreshold: 24

httpGet:

host: 192.168.34.2

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/ca-certificates

name: etc-ca-certificates

readOnly: true

- mountPath: /etc/pki

name: etc-pki

readOnly: true

- mountPath: /etc/kubernetes/pki

name: k8s-certs

readOnly: true

- mountPath: /usr/local/share/ca-certificates

name: usr-local-share-ca-certificates

readOnly: true

- mountPath: /usr/share/ca-certificates

name: usr-share-ca-certificates

readOnly: true

- name: webhook-config

mountPath: /etc/config

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

securityContext:

seccompProfile:

type: RuntimeDefault

volumes:

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/ca-certificates

type: DirectoryOrCreate

name: etc-ca-certificates

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: etc-pki

- hostPath:

path: /etc/kubernetes/pki

type: DirectoryOrCreate

name: k8s-certs

- hostPath:

path: /usr/local/share/ca-certificates

type: DirectoryOrCreate

name: usr-local-share-ca-certificates

- hostPath:

path: /usr/share/ca-certificates

type: DirectoryOrCreate

name: usr-share-ca-certificates

- hostPath:

path: /etc/config

type: DirectoryOrCreate

name: webhook-config

status: {}

Authorization mechanisms #

RBAC vs ABAC #

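ABAC: similar to the static password file. It is hard to manage in Kubernetes, and the API server must be restarted for authorization changes to take effect.

RBAC: can be configured directly with kubectl or the Kubernetes API. RBAC can also grant a user the right to manage authorization, so permissions can be administered without touching the nodes.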

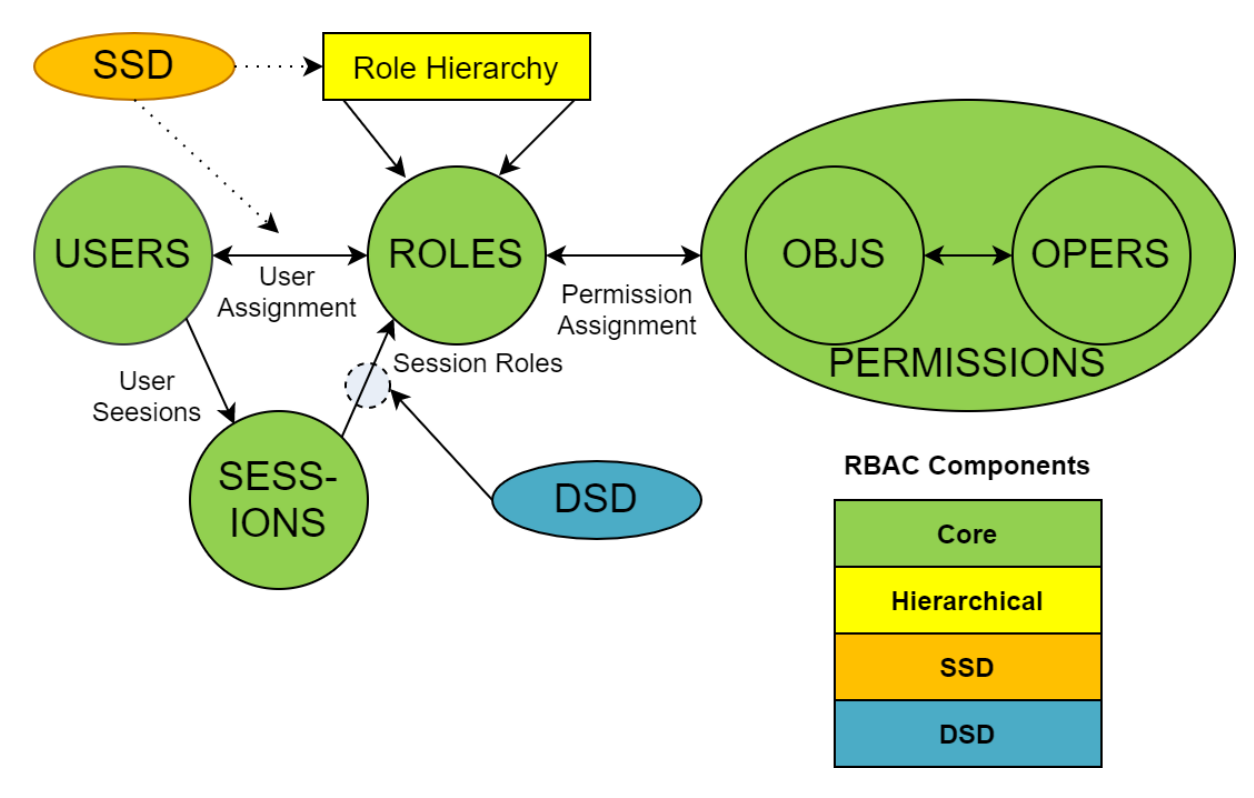

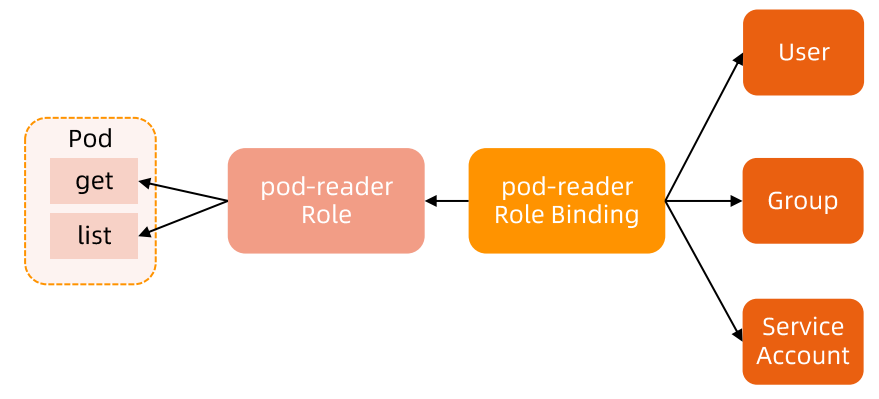

RBAC #

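RBAC permissions can be delegated: if an admin grants a user the add permission, that user can in turn grant the add permission to other users, so permission management can be operated in tiers.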

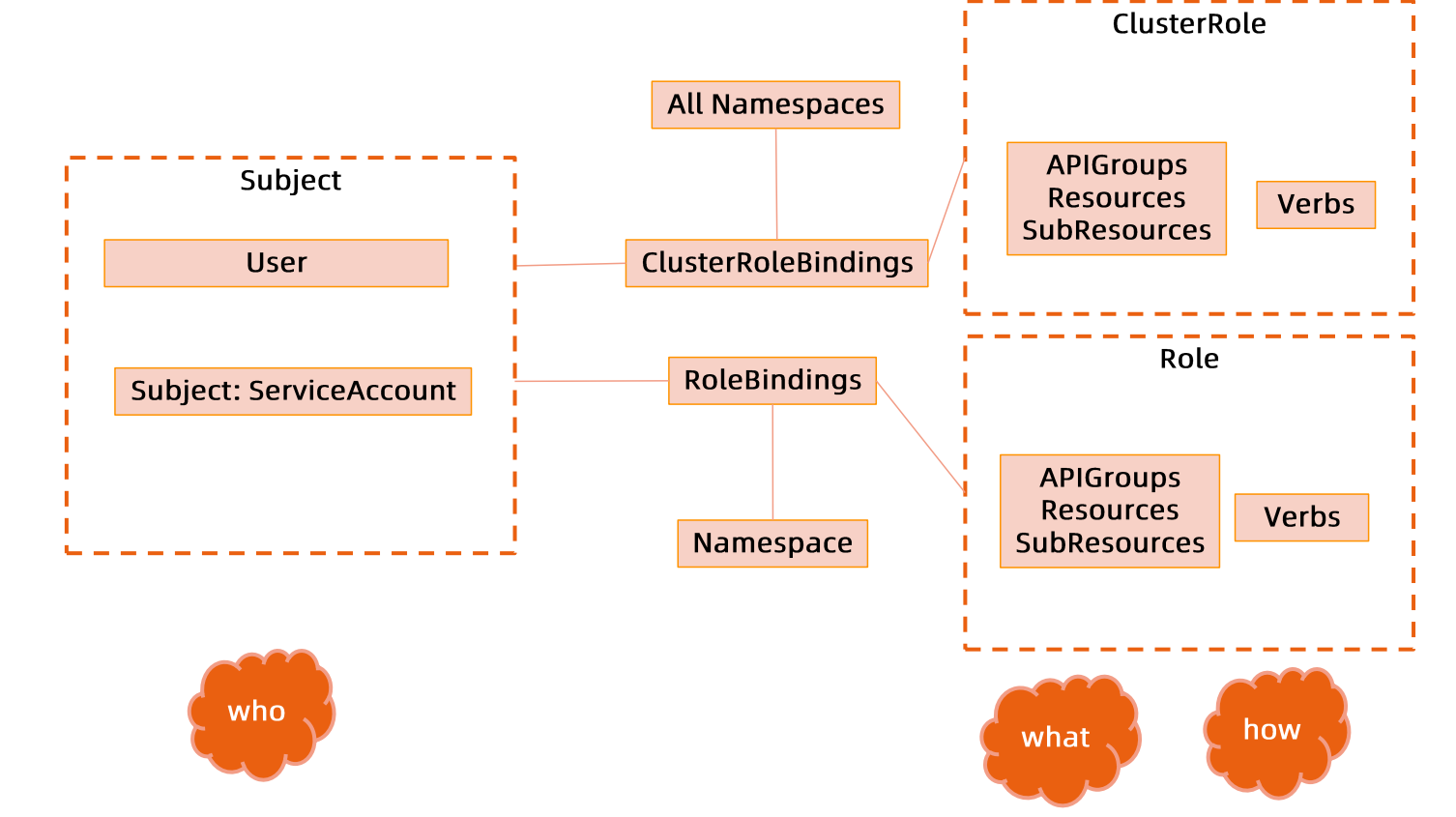

How RBAC is implemented in Kubernetes

ClusterRoleBinding and RoleBinding differ in scope:

- Role and RoleBinding are scoped to a namespace

- ClusterRole and ClusterRoleBinding are scoped to the whole cluster
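The scoping rule can be illustrated with a simplified evaluator. This is a sketch under stated assumptions, not the real authorizer: roles are a flat mapping from a name to its rules, `roleRef` is reduced to that name, and a binding that carries a `namespace` key stands for a RoleBinding while one without it stands for a ClusterRoleBinding.

```python
def rule_allows(rule, api_group, resource, verb):
    """True if one policy rule covers the request; '*' acts as a wildcard."""
    def covers(allowed, value):
        return "*" in allowed or value in allowed
    return (covers(rule["apiGroups"], api_group)
            and covers(rule["resources"], resource)
            and covers(rule["verbs"], verb))


def is_allowed(user, groups, namespace, api_group, resource, verb, roles, bindings):
    """Check a request against every binding that applies to it.

    roles: role name -> list of rules.
    bindings: list of dicts with 'subjects', 'roleRef' and optionally 'namespace'.
    """
    for b in bindings:
        # A namespaced binding only applies to requests in its own namespace.
        if "namespace" in b and b["namespace"] != namespace:
            continue
        matches_subject = any(
            (s["kind"] == "User" and s["name"] == user)
            or (s["kind"] == "Group" and s["name"] in groups)
            for s in b["subjects"]
        )
        if not matches_subject:
            continue
        if any(rule_allows(r, api_group, resource, verb) for r in roles[b["roleRef"]]):
            return True
    return False  # deny by default: no matching rule means forbidden
```

With a namespaced binding of `cluster-admin` to a user in `default`, the user can list pods in `default` but not in `kube-system`, even though the referenced role itself allows everything.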

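Role and ClusterRole #

Role: a collection of permissions. For example, a role can contain permission to read pods and permission to list pods. A Role can only be used to authorize access to resources within one specific namespace.

# Role example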

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: default

name: pod-reader

rules:

- apiGroups: [''] # "" indicates the core api group

resources: [pods]

verbs: [get, watch, list]

ClusterRole: covers resources across multiple namespaces, cluster-scoped resources, and non-resource API endpoints (such as /healthz).

# ClusterRole example

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

# "namespace" omitted since ClusterRoles are not namespaced

name: secret-reader

rules:

- apiGroups: [''] # "" indicates the core api group

resources: [secrets]

verbs: [get, watch, list]

Binding #

Granting permissions to a user #

# RoleBinding example (referencing a ClusterRole)

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: development

name: read-secrets

subjects:

- kind: User

name: dave

apiGroup: rbac.authorization.k8s.io

roleRef:

kind: ClusterRole

name: secret-reader

apiGroup: rbac.authorization.k8s.io

Granting permissions to a group #

# For external users

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: read-secrets-global

subjects:

- kind: Group

name: manager # 'name' is case sensitive

apiGroup: rbac.authorization.k8s.io

roleRef:

kind: ClusterRole

name: secret-reader

apiGroup: rbac.authorization.k8s.io

# For internal Kubernetes accounts (ServiceAccounts)

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: read-secrets-global

subjects:

- kind: Group

name: system:serviceaccounts:qa # namespace

apiGroup: rbac.authorization.k8s.io

roleRef:

kind: ClusterRole

name: secret-reader

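apiGroup: rbac.authorization.k8s.io

Best practices #

- A ClusterRole is not tied to a namespace and takes effect across the whole cluster, so grant it with care

- ClusterRoles are generally given only to cluster administrators; other users are granted permissions through namespaced RoleBindings

- CRDs are cluster-scoped resources: after an ordinary user creates a CRD, an administrator must grant the corresponding permissions before the user can actually operate on its objects

- For all roles, keep the spec in source control and apply it from there rather than changing permissions with edit, which can leave permission management in disarray later on

- Permissions can be delegated

- Avoid huge numbers of roles and role bindings: a large number of objects slows down authorization and adds load to the apiserver

- Accessing the apiserver through the insecure port after SSH-ing to a master node bypasses authorization; this can be used when administrative work is needed but permissions are missing. This applies only to older Kubernetes versions that still offer the insecure port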

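Admission control #

Purpose:

- Add custom attributes

- Validate that the object is legal, whether or not it was mutated

Common scenario: quota management (resources are limited, so how do you cap how much a given user can consume?)

Approach:

- Predefine the ResourceQuota for each namespace and store the spec in a ConfigMap

- Create a ResourceQuota controller that watches namespace creation events and, when a namespace is created, creates the corresponding ResourceQuota object in it

- Enable the ResourceQuota admission plugin in the apiserver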
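The check that a quota admission plugin performs on object creation can be pictured roughly as follows. This is a minimal sketch: the function name `admit_create` and the dict-based data model are assumptions made for illustration, and the real plugin tracks many more resource types.

```python
class QuotaExceeded(Exception):
    """Raised when a request would push usage past a hard limit."""


def admit_create(hard, used, requested):
    """Reject the request if any tracked resource would exceed its hard limit.

    hard, used, requested: dicts mapping a resource name to an integer count.
    Returns the updated usage when the request is admitted.
    """
    for name, amount in requested.items():
        if name not in hard:
            continue  # resources without a configured limit are not restricted
        current = used.get(name, 0)
        if current + amount > hard[name]:
            raise QuotaExceeded(
                f"exceeded quota: requested: {name}={amount}, "
                f"used: {name}={current}, limited: {name}={hard[name]}"
            )
    updated = dict(used)
    for name, amount in requested.items():
        updated[name] = updated.get(name, 0) + amount
    return updated
```

Note that the check only runs when something new is requested: existing usage that is already above the limit is not evicted, but every further creation is refused.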

ResourceQuota quota management #

Check the current number of ConfigMaps:

root@master01:~/101/module6/quota# k get cm

NAME DATA AGE

envoy-config 1 12d

kube-root-ca.crt 1 13d

Create a test ConfigMap, envoy-config-test:

root@master01:~/101/module6/quota# k get envoy-config -oy yaml > test.yaml

error: the server doesn't have a resource type "envoy-config"

root@master01:~/101/module6/quota# k get cm envoy-config -oyaml > test.yaml

root@master01:~/101/module6/quota# vim test.yaml

root@master01:~/101/module6/quota# k create -f test.yaml

configmap/envoy-config-test created

root@master01:~/101/module6/quota# k get cm

NAME DATA AGE

envoy-config 1 12d

envoy-config-test 1 4s

kube-root-ca.crt 1 13d

Delete it:

root@master01:~/101/module6/quota# k delete cm envoy-config-test

All of the operations above succeed, because no quota is in place yet.

Create a quota:

root@master01:~/101/module6/quota# k create -f quota.yaml

resourcequota/object-counts created

root@master01:~/101/module6/quota# k get resourcequota

NAME AGE REQUEST LIMIT

object-counts 4s configmaps: 2/1

root@master01:~/101/module6/quota# k get resourcequota -oyaml

apiVersion: v1

items:

- apiVersion: v1

kind: ResourceQuota

metadata:

creationTimestamp: "2022-07-02T09:36:52Z"

name: object-counts

namespace: default

resourceVersion: "246870"

uid: af2b6a4a-a9d4-4a0b-a8b6-f52f0fe630b1

spec:

hard:

configmaps: "1"

status:

hard:

configmaps: "1"

used:

configmaps: "2"

kind: List

metadata:

resourceVersion: ""

Creating another ConfigMap now fails: 2 ConfigMaps are already in use and the hard limit allows only 1:

root@master01:~/101/module6/quota# k create -f test.yaml

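Error from server (Forbidden): error when creating "test.yaml": configmaps "envoy-config-test" is forbidden: exceeded quota: object-counts, requested: configmaps=1, used: configmaps=2, limited: configmaps=1

Admission control plugins #

- AlwaysAdmit: accepts all requests

- AlwaysPullImages: always pulls the latest image; very useful in multi-tenant scenarios

- DenyEscalatingExec: forbids exec and attach operations on privileged containers

- ImagePolicyWebhook: decides image policy through a webhook; requires `--admission-control-config-file` to be configured as well; typically used for container image scanning

- ServiceAccount: automatically creates the default ServiceAccount and ensures that the ServiceAccount referenced by a Pod exists

- SecurityContextDeny: rejects containers with a disallowed SecurityContext configuration

- ResourceQuota: ensures that Pod requests do not exceed the quota; requires a ResourceQuota object in the namespace

- LimitRanger: sets default resource requests and limits for Pods; requires a LimitRange object in the namespace

- InitialResources: sets default resource requests and limits for containers based on the historical usage of the image

- NamespaceLifecycle: ensures that a namespace in the terminating state accepts no new object creation requests, and rejects requests for namespaces that do not exist

- DefaultStorageClass: sets the default StorageClass for PVCs

- DefaultTolerationSeconds: sets the default forgiveness toleration of Pods to 5 minutes

- PodSecurityPolicy: must be enabled when using Pod Security Policies

- NodeRestriction: restricts the kubelet to accessing only resources related to its node, such as node, endpoint, pod, service, secret, configmap, PV, and PVC

View the apiserver admission control plugins:

kubectl exec -it kube-apiserver-node -- kube-apiserver -h

--disable-admission-plugins: admission plugins to disable

--enable-admission-plugins: admission plugins to enable

Custom admission plugin #

Configure a MutatingWebhookConfiguration: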

# {{if eq .k8snode validating "enabled"}}

apiVersion: admissionregistration.k8s.io/v1beta1

kind: MutatingWebhookConfiguration

metadata:

name: ns-mutating.webhook.k8s.io

webhooks:

- name: my-webhook.example.com

clientConfig:

caBundle: {{.serverca_base64}} # CA cert

url: 'https://my-webhook.example.com:9443/my-webhook-path'

failurePolicy: Fail

namespaceSelector: {}

rules:

- apiGroups:

- ''

apiVersions:

- '*'

operations:

- CREATE

resources:

- nodes

sideEffects: Unknown

# {{end}}

Demo #

GitHub - cncamp/admission-controller-webhook-demo: Kubernetes admission controller webhook example

Deploy webhook-demo:

$ kubectl -n webhook-demo get pods

NAME READY STATUS RESTARTS AGE

webhook-server-6f976f7bf-hssc9 1/1 Running 0 35m

Deploy the MutatingWebhookConfiguration:

$ kubectl get mutatingwebhookconfigurations

NAME AGE

demo-webhook 36m

Deploy a pod that sets neither runAsNonRoot nor runAsUser:

$ kubectl create -f examples/pod-with-defaults.yaml

Verify that the pod's security context now contains the default values:

$ kubectl get pod/pod-with-defaults -o yaml

...

securityContext:

runAsNonRoot: true

runAsUser: 1234

...

Also check the pod's logs:

$ kubectl logs pod-with-defaults

I am running as user 1234

Deploy a pod that explicitly sets runAsNonRoot to false, which allows it to run as the root user:

$ kubectl create -f examples/pod-with-override.yaml

$ kubectl get pod/pod-with-override -o yaml

...

securityContext:

runAsNonRoot: false

...

$ kubectl logs pod-with-override

I am running as user 0

Try to deploy a pod with conflicting settings: runAsNonRoot is set to true, but runAsUser is set to 0 (root). The admission controller blocks the creation of this pod.

$ kubectl create -f examples/pod-with-conflict.yaml

Error from server (InternalError): error when creating "examples/pod-with-conflict.yaml": Internal error

occurred: admission webhook "webhook-server.webhook-demo.svc" denied the request: runAsNonRoot specified,

but runAsUser set to 0 (the root user)限流 #

理论方法 #

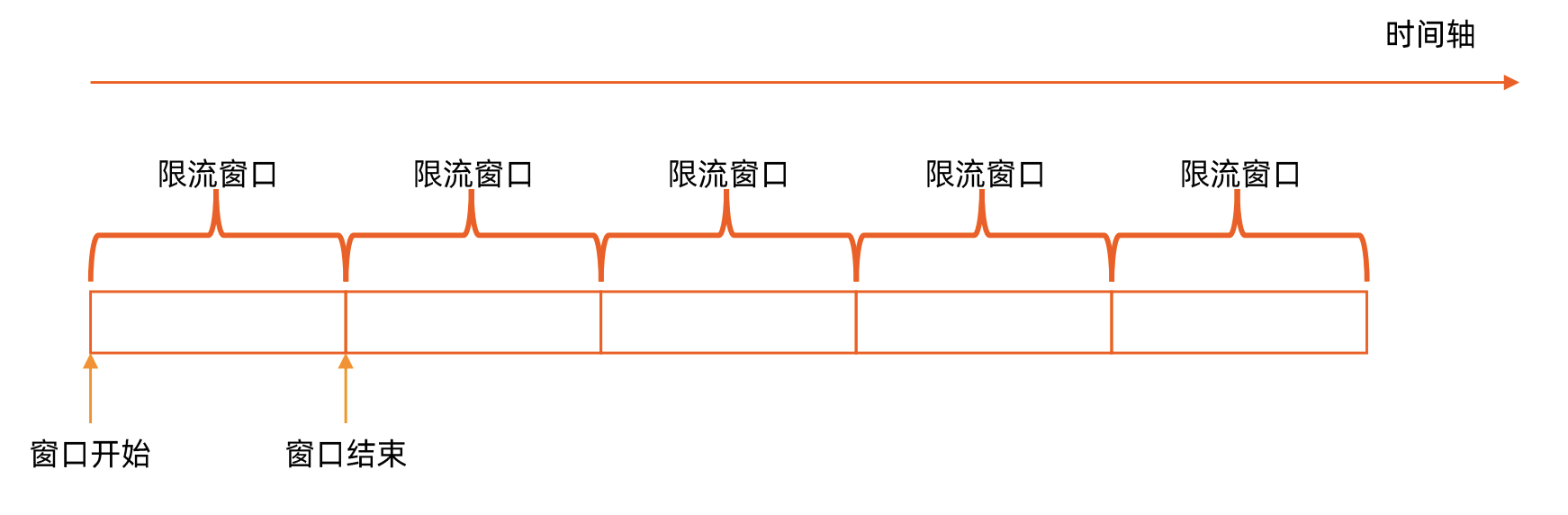

- 计数器固定窗口算法

Note

对一段固定时间窗口内的请求进行计数,如果请求数超过了阈值,则舍弃该请求;

如果没有达到设定的阈值,则接受该请求,并计数+1

当时间窗口结束时,重置计数器为0

Caution

问题:窗口期切换可能导致的超额请求

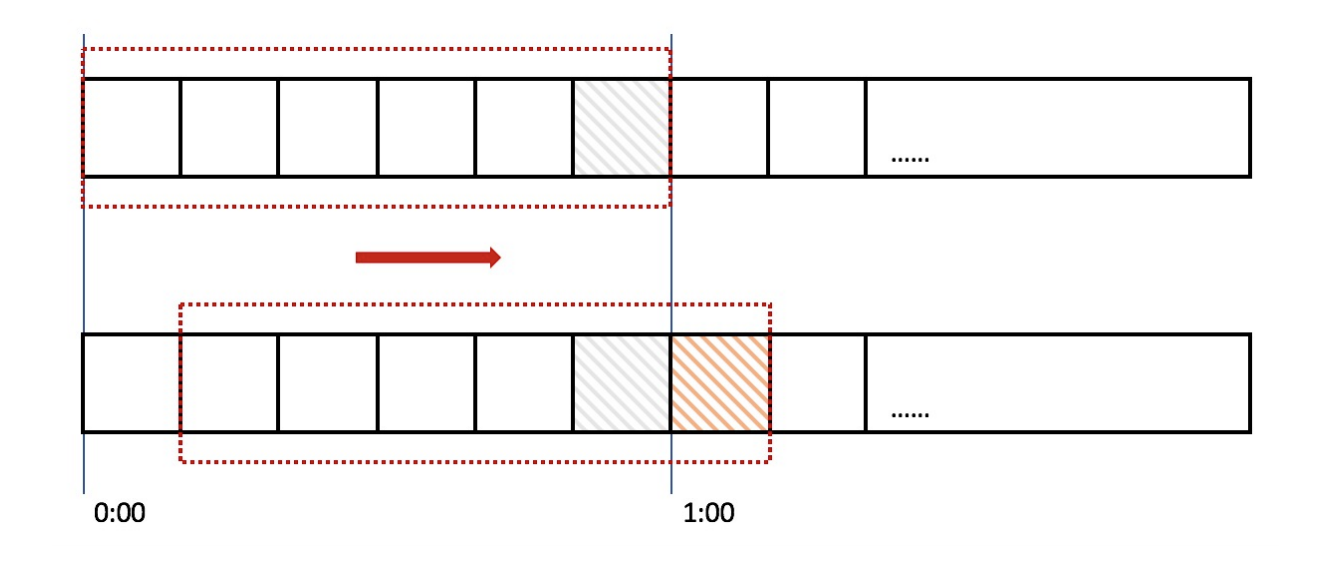

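- Sliding-window counter algorithm

Note

Building on the fixed window, the counting window is split into several sub-windows, and each sub-window maintains its own counter.

When a request's timestamp is later than the end of the current window, the window slides forward by one sub-window: the data of the first sub-window is discarded, the second sub-window becomes the first, and a new sub-window is appended at the end to hold the new request. The sum of the request counts across all sub-windows must never exceed the configured threshold.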

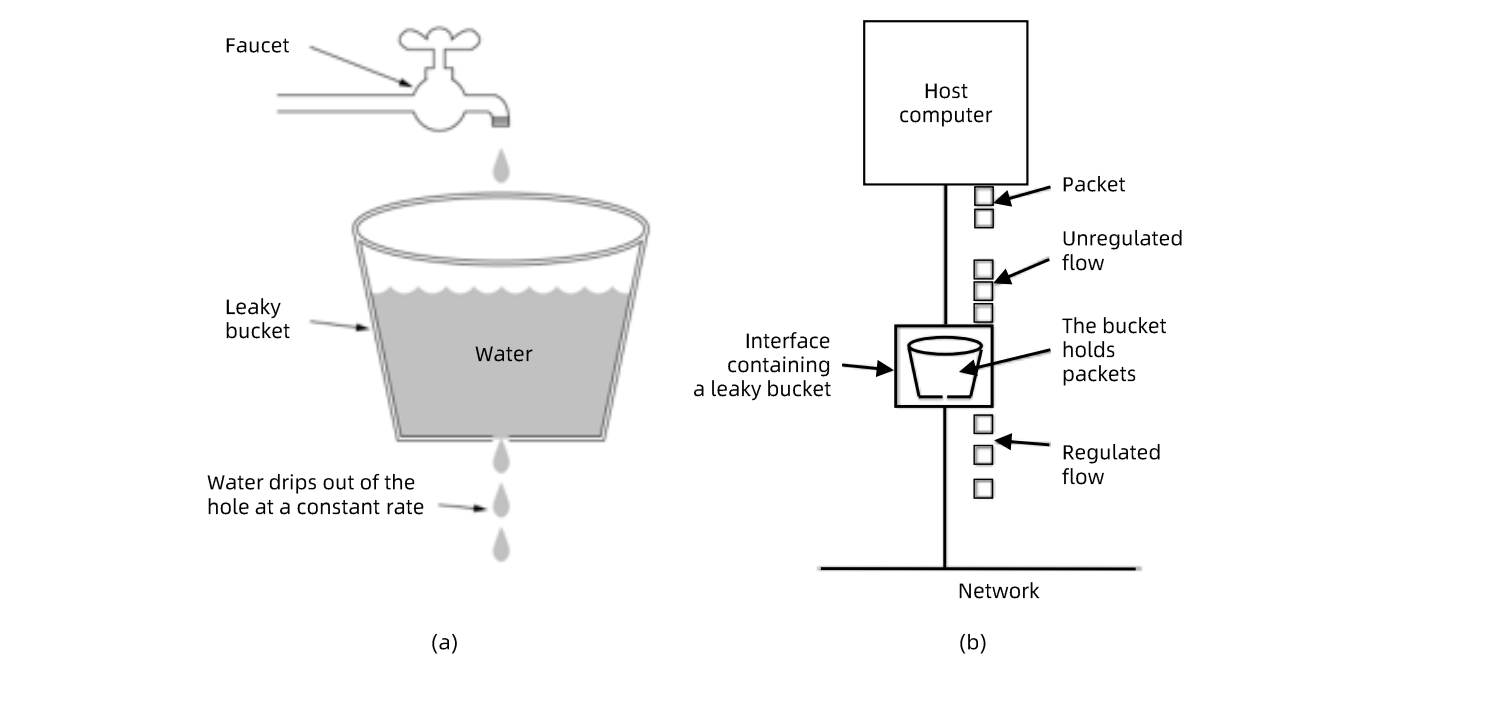

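- Leaky bucket algorithm

Note

Incoming requests first enter the bucket, and the bucket releases them for processing at a constant rate, which smooths the traffic.

When traffic is too heavy and the bucket reaches its maximum capacity, it overflows and the excess requests are dropped.

From the system's point of view, requests always arrive at a smooth rate, which protects the system.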

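- Token bucket algorithm

Note

This improves on the leaky bucket: besides limiting the rate, it also allows a certain amount of burst traffic.

The algorithm maintains a token bucket into which tokens are placed at a constant rate. The bucket has a capacity; once it is full, no more tokens can be added.

When a request arrives, it first tries to take a token from the bucket. If it gets one, the request is processed and the token is consumed; otherwise the request is dropped.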

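Rate limiting in the API server #

Source: staging/src/k8s.io/apiserver/pkg/server/filters/maxinflight.go:WithMaxInFlightLimit()

Control parameters:

- max-requests-inflight: the maximum number of non-mutating (read) requests in flight at a given time

- max-mutating-requests-inflight: the maximum number of mutating requests in flight at a given time; tune it to adjust the apiserver's flow-control QoS

| Parameter | Default | 1000-3000 nodes | >3000 nodes |
|---|---|---|---|
| max-requests-inflight | 400 | 1500 | 3000 |
| max-mutating-requests-inflight | 200 | 500 | 1000 |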

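Limitations of traditional rate limiting

- Coarse granularity: different limits cannot be set for different users or scenarios

- Single queue: everyone shares one window or bucket, so a single misbehaving user can block the whole queue and keep other users' requests from being handled in time

- Unfair: requests from well-behaved users are pushed to the back of the queue and can starve

- No priorities: critical system requests are throttled along with everything else, which makes it hard for the system to recover from failures

API Priority and Fairness (APF) #

- APF classifies and isolates requests at a finer granularity

- It introduces a bounded queuing mechanism, so the API server does not reject any requests during very short bursts

- Requests are dispatched from the queues using fair queuing, so a badly behaved controller cannot starve others (even at the same priority level)

- Core idea of APF: multiple priority levels, multiple queues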

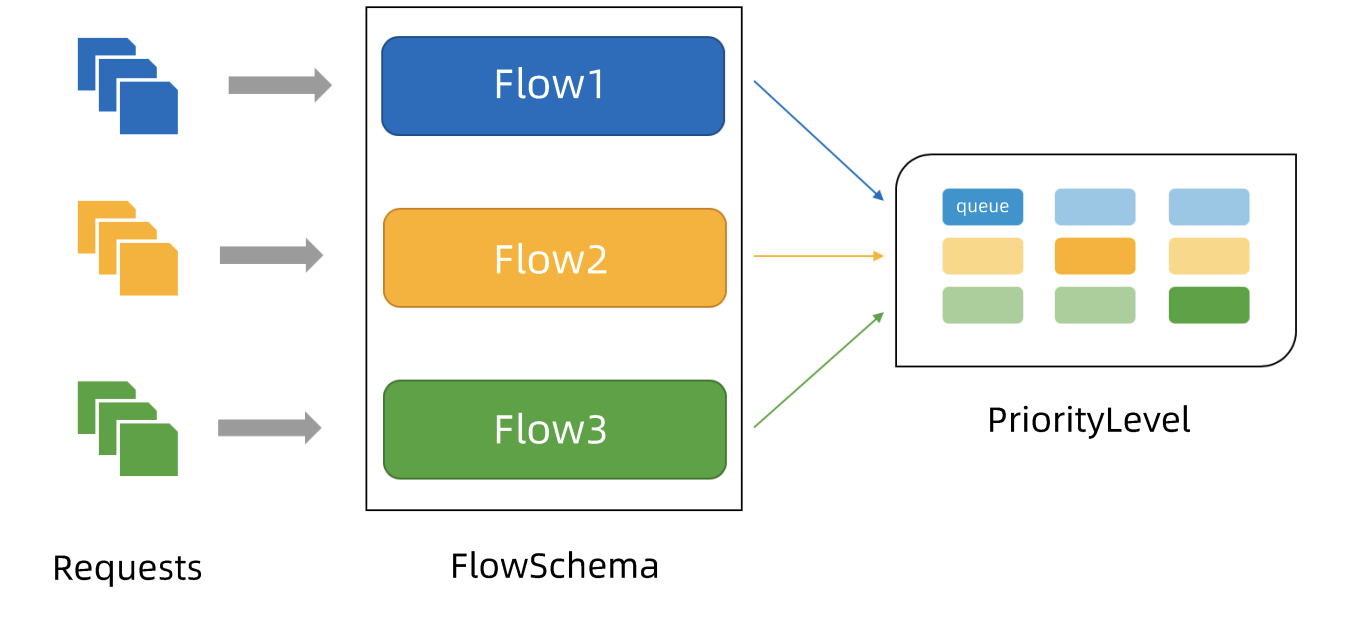

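APF relies on two key resources

- FlowSchema: defines the different flows. Each request class corresponds to one FlowSchema (FS), and the requests within an FS are further divided into flows by the distinguisher

- PriorityLevelConfiguration: defines the priority level (PL) of an FS. Concurrency is isolated between priority levels, so requests at different levels do not crowd each other out, and requests at specific levels can be handled with high priority

Mapping: an FS references exactly one PL, and one PL can serve multiple FSs

Each PL maintains a QueueSet that buffers requests that cannot be handled immediately, so a request is not dropped merely because it exceeds the PL's concurrency limit.

Each flow in an FS is assigned to queues in the QueueSet by the shuffle sharding algorithm. Whenever a request is taken from the QueueSet for execution, the fair queuing algorithm first selects a queue, and then the oldest request in that queue is executed, so that every flow is served fairly.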

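    # a smaller MATCHINGPRECEDENCE number is matched first
    ❯ kubectl get flowschema
    NAME                           PRIORITYLEVEL     MATCHINGPRECEDENCE   DISTINGUISHERMETHOD   AGE   MISSINGPL
    exempt                         exempt            1                    <none>                16d   False
    probes                         exempt            2                    <none>                16d   False
    system-leader-election         leader-election   100                  ByUser                16d   False
    endpoint-controller            workload-high     150                  ByUser                16d   False
    workload-leader-election       leader-election   200                  ByUser                16d   False
    system-node-high               node-high         400                  ByUser                16d   False
    system-nodes                   system            500                  ByUser                16d   False
    kube-controller-manager        workload-high     800                  ByNamespace           16d   False
    kube-scheduler                 workload-high     800                  ByNamespace           16d   False
    kube-system-service-accounts   workload-high     900                  ByNamespace           16d   False
    service-accounts               workload-low      9000                 ByUser                16d   False
    global-default                 global-default    9900                 ByUser                16d   False
    catch-all                      catch-all         10000                ByUser                16d   False

Concepts

- Incoming requests are classified by their attributes through FlowSchemas and assigned a priority level

- Each priority level maintains its own concurrency limit, which strengthens isolation so that requests at different priority levels cannot starve each other

- Within one priority level, a fair queuing algorithm prevents requests from different flows from starving each other

- The algorithm queues requests, which prevents request failures when traffic bursts while the average load is low

Priority levels

- Without APF, the API server's overall concurrency is limited by --max-requests-inflight and --max-mutating-requests-inflight

- With APF enabled, the concurrency limits defined by these two flags are summed, and the sum is divided among a configurable set of priority levels; every incoming request is assigned to one priority level

- Each priority level has its own configuration that sets the number of concurrent requests it may dispatch

- For example, the default configuration has separate priority levels for leader election requests, requests from built-in controllers, and requests from Pods. So even if a misbehaving Pod floods the API server with requests, it cannot prevent leader election or the built-in controllers' operations from succeeding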
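The "sum, then divide by shares" step can be illustrated with a small Go sketch. It assumes that each priority level receives a slice of the total proportional to its configured concurrency shares, rounded up. The function name is hypothetical, and apart from the value 20 for global-default (shown later in this document) the share values are illustration values only.

```go
package main

import "fmt"

// concurrencyLimits divides the server's total concurrency among priority
// levels in proportion to their shares, rounding each result up.
func concurrencyLimits(total int, shares map[string]int) map[string]int {
	sum := 0
	for _, s := range shares {
		sum += s
	}
	limits := make(map[string]int, len(shares))
	if sum == 0 {
		return limits
	}
	for name, s := range shares {
		limits[name] = (total*s + sum - 1) / sum // integer ceiling of total*s/sum
	}
	return limits
}

func main() {
	// --max-requests-inflight + --max-mutating-requests-inflight (default values).
	total := 400 + 200
	// Illustration values; only global-default=20 is taken from this document.
	shares := map[string]int{
		"system":          30,
		"leader-election": 10,
		"workload-high":   40,
		"workload-low":    100,
		"global-default":  20,
		"catch-all":       5,
	}
	limits := concurrencyLimits(total, shares)
	fmt.Println("global-default:", limits["global-default"]) // 600*20/205, rounded up to 59
}
```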

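Queuing

- Requests are matched against FlowSchemas in order of matching precedence to determine which FlowSchema they fall into

- The PriorityLevel referenced by that FlowSchema determines the rate-limiting policy

- The distinguisher method determines whether requests are told apart by user or by namespace

- Every incoming request is assigned to a flow, which is identified by the name of the matching FlowSchema plus a flow distinguisher

- Once requests have been divided into flows, APF assigns them to queues

- Assignment uses shuffle sharding (for example, restricting a flow to 4 of the queues), a technique that makes relatively efficient use of queues to insulate low-intensity flows from high-intensity flows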
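To show how shuffle sharding keeps a heavy flow from occupying every queue, here is a minimal Go sketch. It hashes the flow identifier, "deals" a small hand of distinct queues from the hash value, and enqueues into the shortest queue of that hand. The function names, the FNV hash, and the dealing scheme are assumptions made for illustration and are not claimed to match the apiserver's implementation.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hand deterministically deals handSize distinct queue indices out of
// numQueues, derived from the flow identifier (handSize <= numQueues).
func hand(flowID string, numQueues, handSize int) []int {
	h := fnv.New64a()
	h.Write([]byte(flowID))
	v := h.Sum64()

	deck := make([]int, numQueues)
	for i := range deck {
		deck[i] = i
	}
	picked := make([]int, 0, handSize)
	for i := 0; i < handSize; i++ {
		remaining := uint64(numQueues - i)
		j := int(v % remaining) // choose one of the remaining cards
		v /= remaining
		picked = append(picked, deck[j])
		deck[j] = deck[numQueues-i-1] // remove the dealt card from the deck
	}
	return picked
}

// pickQueue returns the shortest queue among the flow's hand.
func pickQueue(flowID string, queueLens []int, handSize int) int {
	best := -1
	for _, q := range hand(flowID, len(queueLens), handSize) {
		if best == -1 || queueLens[q] < queueLens[best] {
			best = q
		}
	}
	return best
}

func main() {
	queueLens := make([]int, 128) // 128 queues, all empty
	// A flow is identified by FlowSchema name plus distinguisher (here a user).
	fmt.Println(hand("service-accounts/alice", len(queueLens), 6))
	fmt.Println(pickQueue("service-accounts/alice", queueLens, 6))
}
```

Because a given flow always maps to the same few queues, a noisy flow can only fill its own hand, and other flows usually have at least one queue that the noisy flow does not share.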

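Exempt requests #

Some especially important requests are not subject to any of the limits imposed by this feature

    NAME     PRIORITYLEVEL   MATCHINGPRECEDENCE   DISTINGUISHERMETHOD   AGE   MISSINGPL
    exempt   exempt          1                    <none>                16d   False
    probes   exempt          2                    <none>                16d   False

Default configuration (k8s ver: 1.22)

- exempt: not rate limited, for example health probes

- system: for requests from the system:nodes group (the kubelets); kubelets must be able to reach the API server so that workloads can be scheduled onto them

- leader-election: for leader election requests from built-in controllers (in particular, requests for endpoints, configmaps, or leases coming from the system:kube-controller-manager and system:kube-scheduler users and service accounts in the kube-system namespace)

- workload-high: for requests from built-in controllers

- workload-low: for requests from any service account, which typically includes all requests from controllers running in Pods

- global-default: handles all other traffic, for example interactive kubectl commands run by non-privileged users

- catch-all: the fallback

PriorityLevelConfiguration #

A PriorityLevelConfiguration represents a single isolation class, with its own limit on the number of outstanding requests and its own limit on the number of queued requests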

    apiVersion: flowcontrol.apiserver.k8s.io/v1beta1
    kind: PriorityLevelConfiguration
    metadata:
      name: global-default
    spec:
      limited:
        assuredConcurrencyShares: 20 # this level's share of the server's total concurrency
        limitResponse:
          queuing:
            handSize: 6 # shuffle sharding setting: how many queues the requests of one flow (FlowSchema + distinguisher) can be enqueued to
            queueLengthLimit: 50 # maximum number of requests in each queue
            queues: 128 # total number of queues in this PriorityLevel
          type: Queue
      type: Limited

FlowSchema #

A FlowSchema matches some inbound requests and assigns them to a priority level

    apiVersion: flowcontrol.apiserver.k8s.io/v1beta3
    kind: FlowSchema
    metadata:
      name: system-leader-election # FlowSchema name
    spec:
      distinguisherMethod:
        type: ByUser # Distinguisher
      matchingPrecedence: 100 # rule precedence (matches the kubectl get flowschema listing)
      priorityLevelConfiguration: # the priority level this FlowSchema maps to
        name: leader-election
      rules:
        - resourceRules:
            - apiGroups:
                - coordination.k8s.io
              namespaces:
                - '*'
              resources:
                - leases
              verbs:
                - get
                - create
                - update
          subjects:
            - kind: User
              user:
                name: system:kube-controller-manager
            - kind: User
              user:
                name: system:kube-scheduler

Debugging #

Use kubectl get --raw <uri> to query a specific URI

- /debug/api_priority_and_fairness/dump_priority_levels: all priority levels and their current state

- /debug/api_priority_and_fairness/dump_queues: a list of all queues and their current state

- /debug/api_priority_and_fairness/dump_requests: all requests currently waiting in queues

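Highly available API server #

Best practices #

- Run multiple apiserver replicas for high availability

The apiserver is stateless, so it is easy to scale up / down

Load balancing

- Configure a load balancer in front of the multiple apiserver instances

- Certificates must be regenerated to include the load balancer VIP

- Reserve ample CPU and memory

As the number of nodes in the cluster grows, the API server's CPU and memory consumption grows as well. Too little CPU lowers its processing efficiency, and too little memory gets the Pod OOMKilled, which makes the service unavailable outright. When planning API server resources, do not look only at current demand; leave ample headroom for the future.

- Make good use of rate limiting (RateLimit)

Two important parameters

- --max-requests-inflight: the maximum number of read requests (Get, List, Watch) processed in parallel at a given time

- --max-mutating-requests-inflight: the maximum number of write requests (Create, Delete, Update, and Patch) processed in parallel at a given time

Once the API server is handling more requests than these two parameters allow, further incoming requests are rejected outright. Rate limiting is therefore an effective way to control the API server's memory usage.

If the values are set too low, "request limit exceeded" errors occur frequently; if they are set too high, the API server may be forcibly terminated for using too much memory. Tune them for the actual environment, taking into account the real-time request volume and the API server's resource configuration.

Client retries should follow exponential backoff

Filtering on the number of in-flight requests alone is too coarse: when there are many requests, important messages may be rejected. Starting with version 1.18, the community introduced the Priority and Fairness feature to provide finer-grained control over client requests.

The feature classifies requests from different users or of different types into priority levels, so that high-priority requests are always handled sooner and are not affected by low-priority requests.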

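- Set an appropriate cache size

The API server and etcd communicate over gRPC, which provides fast data transfer between the two in large clusters. gRPC is built on HTTP/2 with connection multiplexing: for objects in the same group, the API server and etcd share one TCP connection, and different requests travel over different streams.

An HTTP/2 connection has a stream quota, and the size of that quota limits the number of concurrent requests it can carry.

The API server provides a cache for cluster objects. When a client issues a query, the API server by default returns the cached data directly to the client. The cache size can be set with the --watch-cache-sizes flag.

For frequently accessed objects, sizing the cache appropriately greatly reduces how often etcd is accessed, saves network calls, and lowers the read/write pressure on the etcd cluster, which improves object access performance.

⚠️ However, the API server also lets clients bypass the cache. For example, when resourceVersion is not set in the request's ListOptions, the API server fetches the latest data directly from etcd and returns it to the client.

Clients should avoid this where possible and set resourceVersion to 0 in ListOptions, so that the API server reads from its cache instead of going to etcd.

- Clients should prefer long-lived connections

When queries return large payloads and many such queries run concurrently, the TCP link can easily become congested, causing other queries to time out.

So when building components on Kubernetes, such as certain DaemonSets and controllers, that need to query a class of objects, prefer watching for object changes over a long-lived ListWatch connection rather than fetching everything from the API server each time.

If several Informers in the same application watch API server resources, they can be merged to reduce the number of long-lived connections to the API server and thus the load on it.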

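How to access #

| Client type | External LB VIP | Service ClusterIP (kube-proxy) | apiserver IP |
|---|---|---|---|
| Internal | Y | Y | Y |
| External | Y | N | N |

- External clients (user/client/admin) always go through the LB only

- Control plane components should all use the same entry point. Otherwise some may be able to connect while others cannot; for example, status reports may fail to arrive while the controllers are still alive, and the controllers will then start evicting pods

Multi-tenant clusters #

Trust

- Authentication: forbid anonymous access; only trusted users may perform operations

- Authorization: operations are authorized on the basis of that trust, to keep users from affecting each other, for example an ordinary user deleting core k8s services, or user A deleting or modifying user B's applications

Isolation

- Visibility isolation: users care only about their own applications and do not need to see other users' services and deployments.

- Resource isolation: some critical projects have high resource demands and need dedicated hardware that is not shared with others.

- Application access isolation: services created by a user can be accessed by other users according to defined rules.

Resource isolation

- Quota management: who can use how much of which resource? (ResourceQuota)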

apimachinery components #

- External version: the version of a k8s kind that is exposed externally, for example v1alpha1, v1beta1, and so on

- Internal version: the version that k8s converts externally submitted objects to before storing them in etcd

How to define a group #

pkg/apis/core/register.go

    // Define the GroupVersion
    var SchemeGroupVersion = schema.GroupVersion{Group: GroupName, Version: runtime.APIVersionInternal}

    // Define the SchemeBuilder
    var (
        SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
        AddToScheme   = SchemeBuilder.AddToScheme
    )

    // Add the objects to the SchemeBuilder
    func addKnownTypes(scheme *runtime.Scheme) error {
        if err := scheme.AddIgnoredConversionType(&metav1.TypeMeta{}, &metav1.TypeMeta{}); err != nil {
            return err
        }
        scheme.AddKnownTypes(SchemeGroupVersion,
            &Pod{},
            &PodList{},
            // ... remaining types elided in the original
        )
        return nil
    }

Code-generation tags #

Global tags (apply to the whole group): defined in doc.go

    // +k8s:deepcopy-gen=package

(deepcopy methods are generated for every object in the current package)

Local tags: defined on each object in types.go

    // +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
    // +genclient
    // +genclient:nonNamespaced
    // +genclient:noVerbs
    // +genclient:onlyVerbs=create,delete
    // +genclient:skipVerbs=get,list,create,update,patch,delete,deleteCollection,watch
    // +genclient:method=Create,verb=create,result=k8s.io/apimachinery/pkg/apis/meta/v1.Status

Implementing etcd storage #

pkg/registry/core/configmap/storage/storage.go

    // NewREST returns a RESTStorage object that will work with ConfigMap objects.
    func NewREST(optsGetter generic.RESTOptionsGetter) (*REST, error) {
        store := &genericregistry.Store{
            NewFunc:                   func() runtime.Object { return &api.ConfigMap{} },
            NewListFunc:               func() runtime.Object { return &api.ConfigMapList{} },
            PredicateFunc:             configmap.Matcher,
            DefaultQualifiedResource:  api.Resource("configmaps"),
            SingularQualifiedResource: api.Resource("configmap"),

            CreateStrategy: configmap.Strategy, // create
            UpdateStrategy: configmap.Strategy, // update
            DeleteStrategy: configmap.Strategy, // delete

            TableConvertor: printerstorage.TableConvertor{TableGenerator: printers.NewTableGenerator().With(printersinternal.AddHandlers)},
        }
        // Entry point of the apiserver caching mechanism
        options := &generic.StoreOptions{
            RESTOptions: optsGetter,
            AttrFunc:    configmap.GetAttrs,
        }
        if err := store.CompleteWithOptions(options); err != nil {
            return nil, err
        }
        return &REST{store}, nil
    }

subresource #

A subresource is a set of attributes embedded in a Kubernetes object that has its own handling logic, for example the pod status

Note

No need to validate resourceVersion

No need to worry about being overwritten by a request that carries stale status

    statusStore.UpdateStrategy = pod.StatusStrategy

    var StatusStrategy = podStatusStrategy{Strategy}

    func (podStatusStrategy) PrepareForUpdate(ctx context.Context, obj, old runtime.Object) {
        newPod := obj.(*api.Pod)
        oldPod := old.(*api.Pod)
        // On a status update, any spec in the request is overwritten with the spec stored in etcd
        newPod.Spec = oldPod.Spec
        newPod.DeletionTimestamp = nil

        // don't allow the pods/status endpoint to touch owner references since old kubelets corrupt them in a way
        // that breaks garbage collection
        newPod.OwnerReferences = oldPod.OwnerReferences
    }

Registering the APIGroup #

Register the group with the corresponding API handler

    // Define the Storage (NewREST returns a storage object and an error)
    configMapStorage, err := configmapstore.NewREST(restOptionsGetter)
    if err != nil {
        klog.Fatalf("Error creating ConfigMap storage: %v", err)
    }
    restStorageMap := map[string]rest.Storage{
        "configMaps": configMapStorage,
    }

    // Define the object's StorageMap
    apiGroupInfo.VersionedResourcesStorageMap["v1"] = restStorageMap

    // Register the objects with the API server (mount the handlers)
    if err := m.GenericAPIServer.InstallLegacyAPIGroup(genericapiserver.DefaultLegacyAPIPrefix, &apiGroupInfo); err != nil {
        klog.Fatalf("Error in registering group versions: %v", err)
    }

Code generation #

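- deepcopy-gen: generates DeepCopy methods for objects, used to create copies of an object.

- client-gen: creates the Clientset used for CRUD operations on objects.

- informer-gen: creates the Informer framework for objects, used to watch for object changes.

- lister-gen: builds the Lister framework for objects, which provides a client-side cache for Get and List operations.

- conversion-gen: builds Conversion methods for objects, used to convert between internal and external versions and between different version numbers.

Required tools

    BUILD_TARGETS=(
      vendor/k8s.io/code-generator/cmd/client-gen
      vendor/k8s.io/code-generator/cmd/lister-gen
      vendor/k8s.io/code-generator/cmd/informer-gen
    )

Generation command

    ${GOPATH}/bin/deepcopy-gen --input-dirs {external-version-package-path} \
      -O zz_generated.deepcopy \
      --bounding-dirs {output-package-path} \
      --go-header-file ${SCRIPT_ROOT}/hack/boilerplate.go.txt

References: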